Smart Vision Systems for Pick and Place Robotics

- Blue Sky Robotics

- Nov 21, 2025

- 5 min read

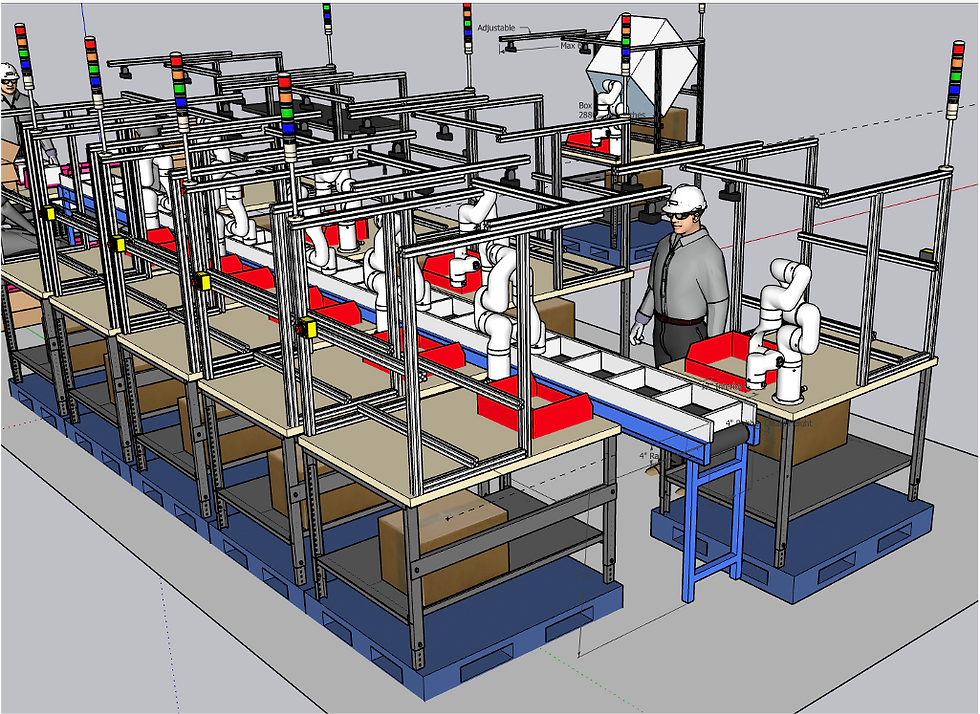

Smart vision systems are redefining how factories and warehouses handle small parts and complex assemblies by giving robots the sight and decision-making needed for faster, more accurate pick and place work. Moving beyond simple 2D imaging, modern solutions use 3D depth sensing, structured light and machine learning so robots can identify, grasp and relocate objects with human-like adaptability across variable orientations and cluttered scenes. For Blue Sky Robotics’ audience in manufacturing, warehousing and automation, that means higher throughput, fewer errors and greater flexibility when production requirements change.

Software tools such as Python and OpenCV now tie camera hardware to powerful image processing and AI-driven object recognition, enabling custom workflows, real-time quality checks and adaptive gripping strategies. This article covers vision hardware and sensing modalities, the differences between 2D and 3D approaches, and the software stacks that connect cameras to robot controllers, all framed around practical automation outcomes for camera-equipped pick and place robots. First, we examine how smart vision systems integrate with modern pick and place robots to improve precision and throughput.

The Foundation of Vision Systems in Pick and Place Robotics

Modern pick-and-place cells rely on a layered sensing and processing architecture where cameras, depth sensors, and PLCs feed data into real-time image processing pipelines. Integrating 2D and 3D vision systems allows robots to capture both texture and spatial geometry so that object detection, pose estimation, and grasp planning operate on complementary data. High-frame-rate cameras and specialized sensors combined with optimized image-processing algorithms enable millimeter-level localization required for high-throughput assembly.
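To make the idea of complementary 2D and 3D data concrete, here is a minimal sketch of one common fusion step. A 2D detector supplies a pixel mask for a part, an aligned depth image supplies its distance, and together they give a 3D position in the camera frame. It assumes an ideal pinhole camera, already-undistorted images, and a depth map registered to the color image; the function name `locate_part` and the intrinsics `fx`, `fy`, `cx`, `cy` used below are hypothetical placeholders for values a calibration would provide.

```python
import numpy as np


def locate_part(mask, depth_m, fx, fy, cx, cy):
    """Back-project a 2D detection into a 3D point in the camera frame.

    Assumes a pinhole camera model and a depth image (in metres) that is
    pixel-aligned with the image the mask came from.
    """
    rows, cols = np.nonzero(mask)
    if rows.size == 0:
        return None
    # Centroid of the detected region in pixel coordinates (u = column, v = row).
    u, v = cols.mean(), rows.mean()
    # Median depth over the region is less sensitive to holes and edge noise.
    d = depth_m[rows, cols]
    d = d[np.isfinite(d) & (d > 0)]
    if d.size == 0:
        return None
    z = float(np.median(d))
    # Pinhole back-projection: X = (u - cx) * Z / fx, Y = (v - cy) * Z / fy.
    return np.array([(u - cx) * z / fx, (v - cy) * z / fy, z])


# Hypothetical example: a part detected around pixel (380, 270), 0.5 m away.
mask = np.zeros((480, 640), dtype=bool)
mask[260:281, 370:391] = True
depth = np.full((480, 640), 0.5)
point = locate_part(mask, depth, fx=600.0, fy=600.0, cx=320.0, cy=240.0)
print(point)  # approximately [0.05, 0.025, 0.5]
```

Taking the median depth over the whole mask, rather than the depth at the single centroid pixel, is a deliberate choice: depth sensors often return zeros or NaNs at edges and on shiny surfaces.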

Accurate operation depends on careful calibration, controlled illumination, and mitigation of environmental factors such as reflections, dust, and vibration, because even small errors in camera pose or lighting can translate to failed picks. Software toolchains—commonly built with Python and libraries like OpenCV—perform distortion correction, segmentation, and AI-driven recognition to make raw sensor data actionable for the robot controller. Regular calibration routines and adaptive lighting systems maintain performance across shifts and product variants.
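A quick calculation shows why small calibration errors matter. In a simplified model that ignores lens distortion, an uncorrected tilt of θ in the camera mounting shifts where the camera thinks a part is by roughly d · tan θ at working distance d. The 800 mm distance and 0.5° tilt below are illustrative assumptions, not figures from any particular cell.

```python
import math


def lateral_error_mm(working_distance_mm: float, tilt_error_deg: float) -> float:
    """Approximate lateral pick error caused by an uncorrected camera tilt.

    Simplified geometry: the line of sight rotates by the tilt angle about the
    camera centre, so the error grows linearly with working distance.
    """
    return working_distance_mm * math.tan(math.radians(tilt_error_deg))


# Illustrative numbers: camera 800 mm above the parts, 0.5 degree tilt error.
err = lateral_error_mm(800.0, 0.5)
print(f"{err:.1f} mm")  # 7.0 mm
```

Roughly 7 mm of error can be enough to miss a small part entirely, which is why recalibration is worth scheduling rather than treating as a one-time setup step.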

Fusing vision outputs with motion control closes the loop: real-time feedback refines trajectories, compensates for part variability, and shortens cycle times while preserving safety and repeatability. The move from 2D-only setups to 3D-capable vision gives robots human-like adaptability in identifying, grasping, and relocating complex parts, increasing precision, speed, and flexibility on modern manufacturing lines.
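The closed-loop idea can be illustrated with a deliberately simplified proportional correction: on each cycle the camera measures the remaining XY offset between the gripper and the part, and the robot moves a fraction of that offset. The function name, gain, tolerance, and noise-free simulated measurement are assumptions for illustration; a production visual-servo controller would also have to handle sensor noise, latency, and robot dynamics.

```python
import numpy as np


def correct_xy(start_xy, target_xy, gain=0.5, tol_mm=0.1, max_iters=50):
    """Iteratively move toward a camera-measured target with a proportional gain.

    Returns the final position and the number of corrective moves made.
    The measurement here is simulated as perfect (target - current).
    """
    pos = np.asarray(start_xy, dtype=float)
    target = np.asarray(target_xy, dtype=float)
    for i in range(max_iters):
        error = target - pos  # what the camera would report, noise-free
        if np.linalg.norm(error) < tol_mm:
            return pos, i
        pos = pos + gain * error  # move a fraction of the measured offset
    return pos, max_iters


final, moves = correct_xy(start_xy=(0.0, 0.0), target_xy=(6.0, 8.0))
print(moves)  # 7
```

A gain below 1 trades a few extra cycles for stability: with a noisy measurement, a full correction on every cycle would pass that noise straight into the motion.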

2D vs. 3D Vision Systems: Capabilities and Use Cases

When deciding between 2D and 3D systems for pick-and-place tasks, engineers weigh the benefits and limitations of each approach to match application needs. 2D vision systems, typically built around a single conventional camera, excel at high-resolution color inspection, fast pattern matching, and low-cost deployment for well-fixtured, planar parts, and they are straightforward to integrate into existing cells to boost precision and cycle times. Their chief weakness is the lack of depth perception: without added fixtures or sensors, they struggle with tilted, stacked, or occluded parts, and they are more sensitive to lighting and to changes in part orientation.
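Fast pattern matching on well-fixtured parts is often done with normalized cross-correlation. The sketch below implements the zero-mean normalized score that OpenCV exposes as `cv2.matchTemplate` with `cv2.TM_CCOEFF_NORMED`, written as a plain NumPy loop so the math is visible; it is far slower than OpenCV and only meant to show what the score measures. The function name `match_template_ncc` is hypothetical.

```python
import numpy as np


def match_template_ncc(image, template):
    """Return ((x, y), score) of the best zero-mean normalized cross-correlation match."""
    ih, iw = image.shape
    th, tw = template.shape
    t = template.astype(float) - template.mean()
    t_norm = np.sqrt((t ** 2).sum())
    best_score, best_xy = -1.0, (0, 0)
    for y in range(ih - th + 1):
        for x in range(iw - tw + 1):
            patch = image[y:y + th, x:x + tw].astype(float)
            p = patch - patch.mean()
            denom = np.sqrt((p ** 2).sum()) * t_norm
            if denom == 0:
                continue  # flat patch or flat template: correlation undefined
            score = float((p * t).sum() / denom)
            if score > best_score:
                best_score, best_xy = score, (x, y)
    return best_xy, best_score


rng = np.random.default_rng(0)
scene = rng.integers(0, 256, size=(40, 60)).astype(np.uint8)
template = scene[12:20, 30:40].copy()  # the "taught" part appearance
print(match_template_ncc(scene, template))  # best match at (30, 12), score close to 1.0
```

Because the score is normalized by the energy of both the patch and the template, uniform brightness and contrast changes do not affect it. It still assumes the part appears at roughly the taught scale and rotation, which is exactly the fixtured-part case described above.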

By contrast, 3D cameras and depth sensors give robots a spatial understanding that enables reliable grasp planning, bin picking, and handling of irregular or overlapping objects, supporting human-like adaptability in complex environments. Although 3D hardware and processing involve trade-offs in accuracy, throughput, and budget, these systems are often essential in mixed-model manufacturing, assembly automation, and any application that needs depth-driven trajectories. Modern software stacks, from Python and OpenCV for image processing to AI models for object recognition, help bridge both modalities so smart vision platforms can deliver flexible, scalable pick-and-place solutions on the factory floor.
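One simple heuristic used in bin picking shows how depth changes the problem: with a camera looking down into the bin, the surface closest to the camera usually belongs to an object on top of the pile, which is the least likely to be blocked by its neighbors. The sketch assumes a depth image in millimetres where 0 or NaN marks missing data (a common sensor convention, but check your camera's); the function name is hypothetical, and it only proposes a candidate pixel, with no collision or grasp-quality checking.

```python
import numpy as np


def topmost_candidate(depth_mm, min_valid_mm=1.0):
    """Return (row, col, depth_mm) of the valid pixel nearest an overhead camera."""
    depth = np.asarray(depth_mm, dtype=float)
    valid = np.isfinite(depth) & (depth >= min_valid_mm)
    if not valid.any():
        return None
    # Invalid pixels are pushed to +inf so argmin ignores them.
    masked = np.where(valid, depth, np.inf)
    r, c = np.unravel_index(np.argmin(masked), masked.shape)
    return int(r), int(c), float(depth[r, c])


bin_depth = np.full((5, 5), 900.0)  # bin floor 900 mm from the camera
bin_depth[1, 3] = 850.0             # top of a part lying in the bin
bin_depth[0, 0] = 0.0               # dropout reported as 0
bin_depth[4, 4] = np.nan            # dropout reported as NaN
print(topmost_candidate(bin_depth))  # (1, 3, 850.0)
```

In practice this is a first pass; a grasp planner would then check gripper clearance and surface orientation around the candidate before committing to a pick.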

Integrating Python and OpenCV for Intelligent Vision Control

Developers routinely build vision pipelines using Python and OpenCV libraries to handle image acquisition, preprocessing, and feature extraction on modern pick-and-place robots, enabling fast, deterministic object detection in factory settings. Common computer vision algorithms—such as Canny edge detection, contour analysis, watershed and other segmentation methods, and keypoint descriptors like ORB or SIFT—are combined with deep-learning detectors (for example YOLO or SSD) to identify, segment, and classify parts robustly. These 2D techniques now pair with depth sensing and point-cloud processing to bridge the transition to 3D vision, improving grasp planning and reducing mispicks.
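Once segmentation has produced a binary mask for a part, contour analysis typically reduces it to a pick point and an orientation. In OpenCV this is commonly done with `cv2.findContours` followed by `cv2.moments` or `cv2.minAreaRect`; the sketch below computes the same centroid and second-order central moments directly in NumPy so the formula is visible. It assumes a single part per mask (the function name `blob_pose` is hypothetical), and the angle is measured in image coordinates, where the y axis points down.

```python
import numpy as np


def blob_pose(mask):
    """Centroid (x, y) and principal-axis angle in degrees of a binary mask."""
    ys, xs = np.nonzero(mask)
    if xs.size == 0:
        return None
    cx, cy = xs.mean(), ys.mean()
    dx, dy = xs - cx, ys - cy
    # Second-order central moments, normalised by pixel count.
    mu20, mu02, mu11 = (dx * dx).mean(), (dy * dy).mean(), (dx * dy).mean()
    # Orientation of the major axis: 0.5 * atan2(2*mu11, mu20 - mu02).
    angle = 0.5 * np.degrees(np.arctan2(2.0 * mu11, mu20 - mu02))
    return float(cx), float(cy), float(angle)


part = np.zeros((100, 100), dtype=bool)
part[40:50, 20:80] = True  # a 60 x 10 px bar lying horizontally
print(blob_pose(part))  # centre (49.5, 44.5), angle 0
```

The same three moments feed both the pick point and the gripper rotation, which is why moment-based pose estimation remains a common, cheap step even in pipelines that use a deep-learning detector upstream.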

Open-source frameworks such as ROS, Open3D, and OpenCV’s calibration modules simplify camera calibration, coordinate transforms, and live data visualization, making it easier to integrate vision stacks into robot controllers and to iterate on system tuning. Machine learning further enhances adaptability and precision: transfer learning, real-time CNN inference on edge accelerators, and semantic segmentation models allow robots to handle new part variants, occlusions, and variable lighting with higher throughput and fewer manual rules. Together, these software tools create flexible smart vision systems that raise speed and accuracy in pick and place robotics while shortening deployment cycles.
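Coordinate transforms are where calibration pays off. A hand-eye calibration (for example with OpenCV's `cv2.calibrateHandEye` or a ROS calibration package) yields a rigid transform from the camera frame to the robot base frame, and every detected point is then mapped through it. The sketch below applies such a 4 × 4 homogeneous transform with NumPy; the helper names and the mounting (camera 1.0 m above the base origin, offset 0.4 m in X, looking straight down) are hypothetical, not a real calibration result.

```python
import numpy as np


def make_transform(R, t):
    """Build a 4x4 homogeneous transform from a 3x3 rotation and a translation."""
    T = np.eye(4)
    T[:3, :3] = R
    T[:3, 3] = t
    return T


def camera_to_base(points_cam, T_base_cam):
    """Map Nx3 points from the camera frame into the robot base frame."""
    pts = np.asarray(points_cam, dtype=float).reshape(-1, 3)
    homog = np.hstack([pts, np.ones((len(pts), 1))])
    return (T_base_cam @ homog.T).T[:, :3]


# Hypothetical mounting: camera 1.0 m above the base, 0.4 m along X,
# rotated 180 degrees about X so its optical axis (+Z) points down.
R_down = np.array([[1.0, 0.0, 0.0],
                   [0.0, -1.0, 0.0],
                   [0.0, 0.0, -1.0]])
T_base_cam = make_transform(R_down, [0.4, 0.0, 1.0])

part_in_camera = [0.05, 0.02, 0.9]  # metres, e.g. from a depth-based detection
print(camera_to_base(part_in_camera, T_base_cam))  # approximately [[0.45, -0.02, 0.1]]
```

Keeping the transform as a single 4 × 4 matrix makes it easy to chain further frames (for example a tool offset) by matrix multiplication.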

Frequently Asked Questions

How does a pick and place robot use its camera to identify objects?

A pick and place robot with a camera identifies objects by first capturing visual data, either 2D images or 3D depth maps and point clouds, across the workspace so the system can detect shapes, colors and surface geometry and estimate each part's position and orientation. Software pipelines built with tools like Python and OpenCV perform preprocessing (noise reduction, filtering and segmentation) and extract candidate features. AI models then classify the items and compute precise 6-DoF poses: CNNs for 2D images, and point-cloud or voxel networks and matching algorithms for 3D data. The detected poses are transformed into robot coordinates for motion planning and grasp execution. Moving from 2D to 3D vision makes this process considerably more robust and adaptable in cluttered or variable manufacturing settings.
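The preprocessing stage can be sketched in a few lines. This example assumes a grayscale image where parts are brighter than the background, removes isolated "salt" pixels with a 3 × 3 median filter, and then segments with Otsu's automatic threshold. OpenCV provides both operations directly (`cv2.medianBlur`, and `cv2.threshold` with the `cv2.THRESH_OTSU` flag); the NumPy versions and function names here are illustrative, written out only to show the idea behind those calls.

```python
import numpy as np


def median3x3(img):
    """3x3 median filter with edge replication (removes isolated noisy pixels)."""
    h, w = img.shape
    padded = np.pad(img, 1, mode="edge")
    shifted = [padded[dy:dy + h, dx:dx + w] for dy in range(3) for dx in range(3)]
    return np.median(np.stack(shifted), axis=0).astype(img.dtype)


def otsu_threshold(gray):
    """Return threshold t (pixels > t are foreground) maximising between-class variance."""
    hist = np.bincount(gray.ravel(), minlength=256).astype(float)
    prob = hist / hist.sum()
    omega = np.cumsum(prob)                # weight of the class <= t
    mu = np.cumsum(prob * np.arange(256))  # cumulative mean up to t
    mu_total = mu[-1]
    with np.errstate(divide="ignore", invalid="ignore"):
        sigma_b = (mu_total * omega - mu) ** 2 / (omega * (1.0 - omega))
    sigma_b[~np.isfinite(sigma_b)] = 0.0
    return int(np.argmax(sigma_b))


def segment_parts(gray):
    """Denoise, then threshold: returns a boolean foreground mask."""
    smooth = median3x3(gray)
    return smooth > otsu_threshold(smooth)


image = np.full((40, 40), 20, dtype=np.uint8)  # dark background
image[10:30, 10:30] = 200                      # bright part
image[2, 2] = 255                              # isolated noise pixel
mask = segment_parts(image)
print(mask[2, 2], mask[20, 20])  # False True
```

The median filter also rounds the part's corners by one pixel. That is usually acceptable for locating a part, but worth knowing if the same mask is reused for dimensional inspection.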

Can OpenCV be used in industrial settings for robotic vision?

Yes. OpenCV is commonly deployed in industrial robotic vision: its mature open-source C++ core and stable Python bindings provide optimized, production-ready image-processing building blocks with real-time performance and optional GPU acceleration, while its active ecosystem (including OpenCV contrib) and stable APIs make integration into factory software stacks straightforward. Industrial scalability comes from the surrounding toolchain: ROS with cv_bridge for direct robot integration, GStreamer for camera streaming, Intel OpenVINO and NVIDIA TensorRT for inference acceleration, and PCL for 3D processing. Combined with Python-based AI models, OpenCV supports the move from 2D to 3D vision and helps pick-and-place robots with cameras achieve the precision, speed, and flexibility required on modern production lines.

What are the advantages of 3D vision over 2D in robotic automation?

3D vision gives robots true depth perception, letting them understand object geometry and spatial relationships so they can identify, approach, and grasp items with a human-like adaptability that 2D images cannot reliably provide. This depth information improves accuracy in pick-and-place operations, especially with irregularly shaped or overlapping parts, and on a camera-equipped pick and place robot it boosts precision, speed, and flexibility on production lines. Software toolchains using Python and OpenCV, together with AI-driven image processing, convert 3D point clouds into actionable models and real-time control commands, enabling robust object recognition, pose estimation, and seamless integration into modern automation workflows.
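One way point clouds become actionable for grasping is by estimating the local surface orientation at a pick point, which tells a suction or parallel gripper which direction to approach from. A common method fits a plane to nearby points with principal component analysis: the direction of least variance is the surface normal. The sketch assumes the points are already cropped to a small patch around the pick point and expressed in a camera frame whose +Z axis looks toward the scene; the function name `patch_normal` is hypothetical, and libraries such as Open3D provide optimized normal estimation for whole clouds.

```python
import numpy as np


def patch_normal(points):
    """Estimate centroid and unit surface normal of a small Nx3 point patch via PCA."""
    pts = np.asarray(points, dtype=float)
    centroid = pts.mean(axis=0)
    # Rows of vt are principal directions; the last has the least variance.
    _, _, vt = np.linalg.svd(pts - centroid, full_matrices=False)
    normal = vt[-1]
    # Orient the normal back toward a camera at the origin looking along +Z.
    if normal[2] > 0:
        normal = -normal
    return centroid, normal


# Synthetic tilted surface: z = 0.8 + 0.1 x + 0.2 y (metres), 11 x 11 samples.
xs, ys = np.meshgrid(np.linspace(-0.02, 0.02, 11), np.linspace(-0.02, 0.02, 11))
zs = 0.8 + 0.1 * xs + 0.2 * ys
patch = np.column_stack([xs.ravel(), ys.ravel(), zs.ravel()])
centroid, normal = patch_normal(patch)
print(np.round(normal, 3))  # approximately [0.098, 0.195, -0.976]
```

On real sensor data the smallest singular value is no longer near zero; comparing it with the other two gives a rough flatness score that can be used to reject noisy or strongly curved pick points.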

Emerging Technologies in Robotics

As we delve deeper into the 21st century, technologies in the realm of robotics continue to evolve at an unprecedented pace. This evolution symbolizes a transformative period in the industry that is poised to revolutionize various sectors, from manufacturing to healthcare. The integration of cobots and advanced automation software by Blue Sky Robotics is an illustrative example of the future-forward approach driving this progress.

In conclusion, the convergence of robotics, cobots, and automation software marks a new era in industrial, technological, and societal evolution. As we stand on the threshold of this exciting juncture, staying abreast of these developments and understanding their potential can steer us towards a future that looks exceedingly promising. Speak to an expert from Blue Sky Robotics today to learn more.