Navigating Robotic Structured vs Unstructured Pick and Place

- Blue Sky Robotics

- Nov 10, 2025

Updated: Jan 14

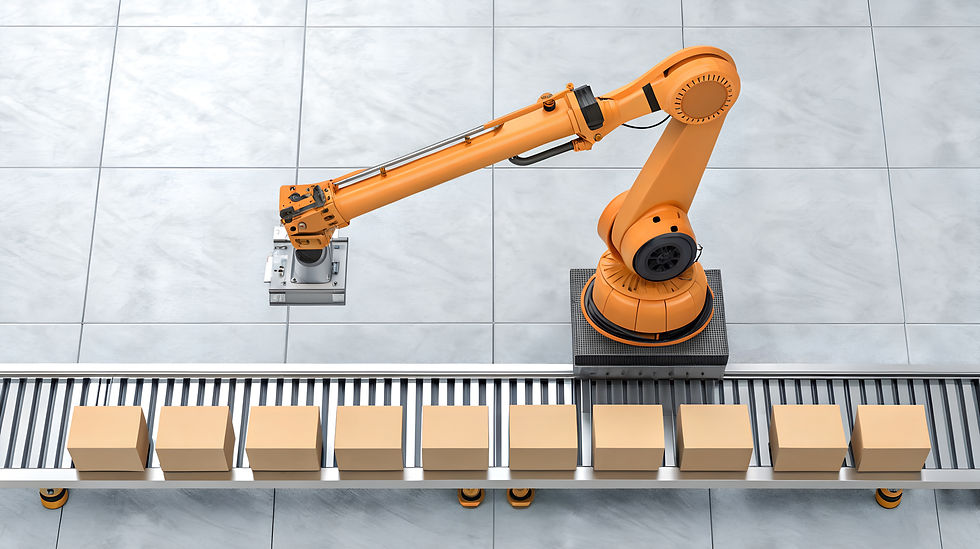

Robotic pick and place systems have become a foundational automation element across manufacturing, warehousing and logistics, where speed, consistency and safety are paramount. These systems range from simple conveyor-fed grippers to advanced robotic cells. They help organizations reduce labor costs, improve throughput and maintain quality at scale, all outcomes that matter to Blue Sky Robotics’ audience.

Comparing robotic structured vs unstructured pick and place clarifies how predictability in the work environment changes design, sensing and control strategies. The next sections will define structured versus unstructured tasks, examine enabling technologies such as sensors, AI and robotics software, and review real-world applications and deployment considerations so engineers and operations leaders can choose the right approach. Understanding the distinction between structured and unstructured tasks is the logical starting point.

Robotic Pick and Place Systems in Modern Automation

Robotic pick and place systems have become a foundational automation capability across manufacturing, warehousing, and logistics, where speed, consistency, safety, and scalability are no longer optional. These systems now range from simple conveyor-fed grippers performing repeatable transfers to advanced robotic cells capable of perception-driven decision making in dynamic environments.

As labor availability tightens and throughput demands rise, organizations rely on pick and place automation to reduce labor dependency, raise output, and maintain quality at scale. These outcomes align directly with the needs of Blue Sky Robotics’ audience. In 2026, pick and place systems are not just tools for efficiency; they are core infrastructure for resilient operations.

Structured vs Unstructured Pick and Place: Why the Difference Matters

Comparing structured and unstructured pick and place systems clarifies how predictability in the work environment fundamentally changes system design, sensing requirements, and control strategies.

Understanding this distinction is the logical starting point for engineers and operations leaders evaluating automation investments. The sections that follow define structured versus unstructured tasks, examine enabling technologies such as sensors, AI, and robotics software, and highlight real-world applications and deployment considerations to help teams choose the right approach.

What Are Structured Pick and Place Systems?

Structured pick and place systems operate in environments where part position, orientation, and timing are known and repeatable. Common examples include high-speed packaging lines, electronics assembly stations, and dedicated production cells where throughput and consistency are paramount.

In these applications, robots execute tasks using fixed coordinates and preprogrammed motion paths that are taught once and repeated with minimal variation. This predictability enables:

- High cycle speeds

- Tight positional accuracy

- Simple integration with conveyors and feeders

- Strong overall equipment effectiveness (OEE)

As a result, structured pick and place systems excel in speed, precision, and reliability, delivering low error rates and stable output over long production runs.
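To make the idea of fixed coordinates and taught motion paths concrete, here is a minimal sketch in Python. The waypoint names, coordinates, and the `run_cycles` helper are illustrative assumptions, not part of any specific robot controller API; the code only logs commands so that it runs without hardware.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Waypoint:
    """A taught Cartesian target (mm) and the gripper state commanded on arrival."""
    name: str
    xyz_mm: Tuple[float, float, float]
    gripper_closed: bool


# Hypothetical taught program: positions are recorded once and never recomputed.
TAUGHT_CYCLE: List[Waypoint] = [
    Waypoint("above_pick", (400.0, 0.0, 150.0), False),
    Waypoint("pick", (400.0, 0.0, 20.0), True),
    Waypoint("above_pick", (400.0, 0.0, 150.0), True),
    Waypoint("above_place", (0.0, 400.0, 150.0), True),
    Waypoint("place", (0.0, 400.0, 20.0), False),
    Waypoint("above_place", (0.0, 400.0, 150.0), False),
]


def run_cycles(program: List[Waypoint], cycles: int) -> List[str]:
    """Replay the taught program and return the command log.

    A real controller would send each target to the robot; this sketch
    only records the commands it would issue.
    """
    log: List[str] = []
    for cycle in range(cycles):
        for wp in program:
            x, y, z = wp.xyz_mm
            grip = "close" if wp.gripper_closed else "open"
            log.append(f"cycle {cycle}: move {wp.name} ({x}, {y}, {z}) gripper {grip}")
    return log


if __name__ == "__main__":
    for line in run_cycles(TAUGHT_CYCLE, cycles=2):
        print(line)
```

Every cycle issues exactly the same targets, which is what makes the cell fast and repeatable, and also why it has no way to react if a part is not where the program expects it.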

However, this rigidity limits flexibility. Misfeeds, layout changes, or new product variants can disrupt performance because structured systems assume consistent inputs. In response, many manufacturers in 2026 are enhancing structured automation with machine vision, additional sensors, and AI-assisted path planning—adding perception and decision-making while retaining the core benefits of predictable workflows.
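One common way to add perception to a structured cell is to keep the taught pick pose and apply a small correction measured by a camera, rejecting parts that are too far out of place. The sketch below assumes a hypothetical vision system that reports the part's planar offset from its expected location; the function name and tolerance values are illustrative only.

```python
import math
from typing import Optional, Tuple

Pose2D = Tuple[float, float, float]  # x_mm, y_mm, rotation_deg


def corrected_pick_pose(
    nominal: Pose2D,
    measured_offset: Pose2D,
    max_shift_mm: float = 10.0,
    max_rot_deg: float = 15.0,
) -> Optional[Pose2D]:
    """Apply a vision-measured offset to the taught pose.

    Returns the corrected pose, or None when the part is outside the
    allowed tolerance and should be treated as a misfeed.
    """
    dx, dy, drot = measured_offset
    # Wrap the rotation offset into the range [-180, 180) degrees.
    drot = (drot + 180.0) % 360.0 - 180.0
    if math.hypot(dx, dy) > max_shift_mm or abs(drot) > max_rot_deg:
        return None
    return (nominal[0] + dx, nominal[1] + dy, nominal[2] + drot)


if __name__ == "__main__":
    taught = (400.0, 0.0, 0.0)
    print(corrected_pick_pose(taught, (3.0, -4.0, 2.0)))  # small shift: corrected
    print(corrected_pick_pose(taught, (30.0, 0.0, 0.0)))  # large shift: rejected
```

The taught program stays the backbone of the cell; the camera only nudges the pick pose or flags a part for rejection, which preserves most of the predictability of the structured workflow.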

What Are Unstructured Pick and Place Environments?

Unstructured pick and place environments are defined by uncertain object positions, variable orientations, and frequent part-to-part variation that invalidate fixed trajectories and rigid tooling.

Unlike structured cells, these environments demand perception-driven decision making and adaptable control. Sensors, AI, and robotics software must work together to maintain throughput despite the variability common in modern warehouses, fulfillment centers, recycling facilities, and mixed-SKU processing operations.

Key challenges include:

- Irregular object shapes that defeat conventional grippers

- Lighting variability that complicates visual detection

- Dense clutter and occlusion that obscure object boundaries

In 2026, modern unstructured pick and place systems address these challenges through AI-based vision and 3D sensing. They fuse depth data with learned detection models to enable accurate pose estimation and robust grasp planning, even when objects overlap or partially obscure one another.
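As a rough illustration of fusing a detection with depth data, the sketch below back-projects the depth pixels inside a detector's bounding box into 3D using the standard pinhole camera model, then averages them to get a candidate grasp point in the camera frame. The function names, the box format, and the use of a plain centroid are assumptions made for this example; production systems typically estimate a full 6-DoF pose and score many grasp candidates.

```python
from typing import List, Optional, Tuple

Point3D = Tuple[float, float, float]
Box = Tuple[int, int, int, int]  # u_min, v_min, u_max, v_max (max is exclusive)


def deproject(u: int, v: int, depth_m: float,
              fx: float, fy: float, cx: float, cy: float) -> Point3D:
    """Pinhole back-projection of pixel (u, v) at a given depth into camera coordinates."""
    x = (u - cx) * depth_m / fx
    y = (v - cy) * depth_m / fy
    return (x, y, depth_m)


def grasp_point_from_box(depth: List[List[float]], box: Box,
                         fx: float, fy: float, cx: float, cy: float) -> Optional[Point3D]:
    """Average the valid 3D points inside a detection box.

    depth[v][u] is the depth in metres; 0.0 marks a missing reading,
    which is common at occlusion edges and on reflective surfaces.
    """
    u_min, v_min, u_max, v_max = box
    points = [
        deproject(u, v, depth[v][u], fx, fy, cx, cy)
        for v in range(v_min, v_max)
        for u in range(u_min, u_max)
        if depth[v][u] > 0.0
    ]
    if not points:
        return None  # no usable depth inside the box
    n = len(points)
    return (
        sum(p[0] for p in points) / n,
        sum(p[1] for p in points) / n,
        sum(p[2] for p in points) / n,
    )


if __name__ == "__main__":
    depth_image = [[0.5] * 4 for _ in range(4)]
    depth_image[1][1] = 0.0  # simulate one missing depth reading
    print(grasp_point_from_box(depth_image, (1, 1, 3, 3), 100.0, 100.0, 2.0, 2.0))
```

The intrinsics `fx`, `fy`, `cx`, and `cy` come from camera calibration. The resulting point is in the camera frame and would still need to be transformed into the robot's base frame before it can be used as a motion target.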

Real-world examples such as order fulfillment centers handling mixed products and recycling plants sorting heterogeneous materials demonstrate why adaptable perception, flexible end effectors, and resilient control software are essential for sustained performance in unstructured operations.

Key Technologies Enabling Adaptability in Pick and Place Robotics

Adaptability in pick and place robotics is increasingly driven by machine learning, which converts continuous operational data into improved decision-making over time. Rather than relying solely on fixed rules, modern systems refine their behavior using logged sensor data to:

- Reduce misgrasps

- Adapt to new part variants

- Improve performance without exhaustive reprogramming
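The idea of improving from logged data can be sketched with something far simpler than a trained model: keep a running success rate for each grasp strategy per part type and prefer the one that has worked best so far. The class and strategy names below are hypothetical, and the smoothed success rate is a deliberately simple stand-in for the machine learning described above.

```python
from collections import defaultdict
from typing import Dict, Sequence, Tuple


class GraspStats:
    """Tracks logged grasp outcomes per (part type, strategy) pair."""

    def __init__(self) -> None:
        self._attempts: Dict[Tuple[str, str], int] = defaultdict(int)
        self._successes: Dict[Tuple[str, str], int] = defaultdict(int)

    def record(self, part_type: str, strategy: str, success: bool) -> None:
        """Log the outcome of one grasp attempt."""
        key = (part_type, strategy)
        self._attempts[key] += 1
        if success:
            self._successes[key] += 1

    def success_rate(self, part_type: str, strategy: str) -> float:
        """Laplace-smoothed success rate; unseen pairs start at 0.5."""
        key = (part_type, strategy)
        return (self._successes[key] + 1) / (self._attempts[key] + 2)

    def best_strategy(self, part_type: str, strategies: Sequence[str]) -> str:
        """Pick the strategy with the highest smoothed rate (first one wins ties)."""
        return max(strategies, key=lambda s: self.success_rate(part_type, s))


if __name__ == "__main__":
    stats = GraspStats()
    for ok in [True] * 8 + [False] * 2:
        stats.record("bottle", "suction", ok)
    for ok in [True] + [False] * 3:
        stats.record("bottle", "pinch", ok)
    print(stats.best_strategy("bottle", ["pinch", "suction"]))
```

A new part variant starts with no history, so every strategy scores 0.5 and the first listed one is tried; the ranking then shifts as outcomes accumulate, without anyone reprogramming the cell.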

Complementing AI, advanced perception and tactile hardware—including 3D cameras, depth sensors, and force-feedback systems—provide the spatial and contact information required to interpret complex environments and support dynamic grasp planning.

Robotics software plays a central role by fusing perception, learning, motion planning, and motor control into a unified system. This integration allows robots to adjust force, change grasp strategies, and respond in real time to deformation or unexpected conditions, improving performance across both structured and unstructured pick and place tasks.
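As one small example of adjusting force in real time, the sketch below closes a gripper with a proportional control loop until a force sensor reads close to a target value. The gripper interface is replaced by a simulated object with a linear stiffness; the function names, gain, and stiffness values are assumptions chosen for illustration, not parameters of any real gripper.

```python
from typing import Callable


def simulated_object(stiffness_n_per_mm: float = 5.0,
                     contact_mm: float = 2.0) -> Callable[[float], float]:
    """Return a fake force sensor: zero force until contact, then linear in closure."""
    def read_force(closure_mm: float) -> float:
        return stiffness_n_per_mm * max(0.0, closure_mm - contact_mm)
    return read_force


def regulate_grip_force(read_force_n: Callable[[float], float],
                        target_n: float,
                        gain_mm_per_n: float = 0.1,
                        tolerance_n: float = 0.2,
                        max_closure_mm: float = 20.0,
                        max_steps: int = 100) -> float:
    """Proportional loop: move the fingers in proportion to the force error.

    Returns the closure (mm) at which the measured force is within tolerance.
    For this simple loop to converge, gain * object stiffness must stay below 2.
    """
    closure_mm = 0.0
    for _ in range(max_steps):
        error_n = target_n - read_force_n(closure_mm)
        if abs(error_n) <= tolerance_n:
            return closure_mm
        closure_mm += gain_mm_per_n * error_n
        closure_mm = min(max(closure_mm, 0.0), max_closure_mm)
    raise RuntimeError("grip force did not settle within max_steps")


if __name__ == "__main__":
    sensor = simulated_object()
    closure = regulate_grip_force(sensor, target_n=10.0)
    print(f"closure {closure:.3f} mm, force {sensor(closure):.2f} N")
```

Because the loop reacts to measured force rather than a fixed finger position, the same code settles at a different closure for a softer or larger object, which is the kind of adjustment that fixed-coordinate programs cannot make.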

How to Make Pick and Place Work with Collaborative Robots

This evolution naturally leads to collaborative robots, or cobots, which have become a key bridge between structured and unstructured automation in 2026. Cobots combine safety, flexibility, and cost-effectiveness, making them well suited for environments where humans and robots must work side by side.

Cobots enable:

- Incremental automation without full cell redesign

- Faster deployment and redeployment

- Safer operation in shared workspaces

These characteristics reflect Blue Sky Robotics’ commitment to practical, scalable automation that aligns technical capability with real-world operational needs. As collaborative robots continue to advance, businesses can expect increasing levels of intelligence, adaptability, and human–machine synergy in pick and place applications.

Looking Ahead

In 2026, robotic pick and place systems are no longer defined solely by speed or repeatability. The ability to adapt, perceive, and learn now separates basic automation from competitive advantage.

By understanding the differences between structured and unstructured pick and place, and the technologies that enable each, manufacturers and logistics operators can deploy systems that meet today’s demands while remaining flexible for tomorrow’s challenges.

To explore how collaborative and intelligent pick and place solutions can support your operation, speak with an expert from Blue Sky Robotics and take the next step toward future-ready automation.